AI Agents Explained: Opportunities, Risks, and Business Use Cases

Hardly any current report on artificial intelligence gets by without terms like agents, AI agents, or Agentic AI. Search interest in the topic has multiplied since the end of 2024, and since the beginning of 2025 practically every major tech company, from OpenAI and Google to Meta, has presented its own agent frameworks or platforms.

According to a recent Gartner forecast, more and more companies are engaging with Agentic AI, while the same analysts predict that over 40% of these projects could be canceled by the end of 2027 (Gartner 2025). This tension makes one thing clear: Agentic AI is more than a short-lived fad. Yet the question remains open whether it is a genuine technological revolution or, in the end, just the next hype that will soon fade.

What tasks can AI agents actually take on, and where are their limits?

These questions are not only exciting for technology enthusiasts. Companies across all industries increasingly face the practical question: how can AI agents be usefully deployed in day-to-day business to automate processes, speed up decisions, or make knowledge more efficiently usable—without losing control, transparency, or security?

In the following sections, we therefore take a closer look at the concept of AI agents: what they are, why they are becoming so relevant right now, which technical foundations underpin them, and where concrete use cases can already be found today. We also address the challenges that come with autonomous AI systems—from technical risks to legal issues to ethical considerations.

In the end, one thing should be clear: between science fiction and real efficiency gains there is only a fine line when it comes to AI agents—and those who understand it can turn the hype into real business value.

Gartner, Inc. (2025). Gartner predicts over 40 percent of agentic AI projects will be canceled by end of 2027.

21 October 2025

2. What Are AI Agents (Agentic AI)?

Definition & How AI Agents Work

An AI agent is a system that perceives its environment, makes decisions independently, and acts purposefully to achieve a specific goal. These three steps—perceive, decide, act—form the core of every agentic system.

Examples from Everyday Life and Business

As abstract as that may sound at first, the principle is quite everyday. A simple example:

A regular thermostat measures the current room temperature (it perceives its environment), compares it with the desired setpoint (it decides), and opens or closes the heating valve to adjust the temperature (it acts). In doing so, it fulfills exactly the three basic principles of an AI agent—even if in a very simple form.

An AI agent therefore always works toward a specific goal that is usually set by a human. This goal can look very different:

- A self-driving car should reach a destination safely.

- A digital assistant should find a meeting time for several people.

- A purchasing agent should automatically place orders when inventory drops.

To achieve the goal, the AI agent “observes” its environment—either physically (like a robot with sensors and cameras) or digitally (for example by accessing files, calendars, emails, or databases). It then compares the current state with the desired target state and independently chooses the next steps.

This process repeats continuously: perception, evaluation, action, and back to the start. With each loop, the agent gets closer to its goal. In the self-driving car example, that means driving on meter by meter until the destination is reached. In the digital world, it means checking calendars, finding open time slots, and automatically sending invitations.
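The loop described above can be sketched in a few lines of Python, using the thermostat from earlier as the agent. This is a minimal illustration under stated assumptions, not how any particular product implements agents: the `Room` class, its heating and cooling rates, and the tolerance of 0.5 degrees are invented for the example.

```python
from dataclasses import dataclass


@dataclass
class Room:
    """Toy environment (hypothetical): temperature rises while heating, falls otherwise."""
    temperature: float
    heating: bool = False

    def tick(self) -> None:
        # Assumed dynamics: +0.5 deg C per step with the heater on, -0.2 deg C with it off.
        self.temperature += 0.5 if self.heating else -0.2


def run_thermostat_agent(room: Room, setpoint: float,
                         tolerance: float = 0.5, max_steps: int = 100) -> int:
    """Loop perceive -> decide -> act until the goal is reached; return the number of actions."""
    for step in range(max_steps):
        current = room.temperature                 # perceive the environment
        if abs(current - setpoint) <= tolerance:   # decide: is the goal reached?
            room.heating = False
            return step
        room.heating = current < setpoint          # decide: heat or not
        room.tick()                                # act; the environment changes
    return max_steps


room = Room(temperature=18.0)
steps = run_thermostat_agent(room, setpoint=21.0)
print(steps, room.temperature)  # 5 20.5
```

Each pass through the `for` loop is one perception-evaluation-action cycle; the `max_steps` limit is an added safeguard so the loop cannot run forever if the goal is unreachable.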

The operating principle is strongly reminiscent of human problem-solving, and that was indeed the original idea behind the concept. As early as the 1950s, researchers in classical AI described so-called agentic systems: technical entities that, like humans, take in information, evaluate it, and act accordingly.

Modern AI agents build on this idea but go far beyond it. Thanks to new technologies they can analyze complex environments, react flexibly to changes, and learn from previous actions. They are therefore no longer just simple rule-based automatons but increasingly versatile digital actors that autonomously take on tasks.

Current research, however, points out that the term “Agentic AI” sometimes repackages an old idea. As Botti (2025) emphasizes in the study “Agentic AI and Multiagentic: Are We Reinventing the Wheel?”, there is a risk that the hype term “Agentic AI” overlooks classical concepts from agent and multi-agent research (e.g., in the work of Wooldridge, Jennings, etc.). This historical perspective helps to critically classify new developments—and shows that modern agents did not arise out of nowhere but are part of a long research tradition.

Botti, V. (2025). Agentic AI and Multiagentic: Are We Reinventing the Wheel?

3. LLM AI Agents – Why Language Models Make the Difference

From Classical Agents to Autonomous Systems

But if AI agents have existed for decades, why are they experiencing such hype right now? The answer lies in another groundbreaking development of recent years: Large Language Models (LLMs).

As Fraunhofer IESE (Siebert & Kelbert, 2024) explains, their strength is based on a special architecture: language is broken down into tokens, translated into semantic vectors, and further processed using probabilities. This is precisely what gives AI agents the flexibility that today makes them such powerful digital actors.
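To make the three steps (tokens, vectors, probabilities) tangible, here is a deliberately tiny toy in Python. It is not how a real LLM computes: real models use learned subword tokenizers, high-dimensional embeddings, and transformer layers. The vectors, the vocabulary, and the rule of using only the last token as context are assumptions chosen purely for illustration.

```python
import math

# Hypothetical 2-D vectors; real LLMs learn embeddings with thousands of dimensions.
# Dimension 0 roughly means "a noun should follow", dimension 1 "a verb should follow".
INPUT_VECTORS = {"the": (1.0, 0.0), "cat": (0.0, 1.0), "dog": (0.0, 1.0)}
# Every candidate next token has an output vector in the same space.
OUTPUT_VECTORS = {"cat": (2.0, 0.0), "dog": (2.0, 0.0), "sat": (0.0, 2.0), "ran": (0.0, 2.0)}


def tokenize(text: str) -> list[str]:
    """Break text into tokens (a whitespace split; real tokenizers use subword units)."""
    return text.lower().split()


def softmax(scores: dict[str, float]) -> dict[str, float]:
    """Turn raw scores into probabilities that sum to 1."""
    top = max(scores.values())
    exps = {tok: math.exp(s - top) for tok, s in scores.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def next_token_probabilities(text: str) -> dict[str, float]:
    tokens = tokenize(text)                    # 1. language -> tokens
    context = INPUT_VECTORS[tokens[-1]]        # 2. tokens -> vectors (toy: last token only)
    scores = {tok: context[0] * out[0] + context[1] * out[1]
              for tok, out in OUTPUT_VECTORS.items()}
    return softmax(scores)                     # 3. scores -> probabilities


probs = next_token_probabilities("The cat")
print(max(probs, key=probs.get))  # sat
```

After "the", the toy favors nouns; after "cat", it favors verbs. The model never "knows" the answer; it only assigns probabilities, which is also why real LLMs can produce fluent but wrong output.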

Language models behind systems like ChatGPT or MyGPT have revolutionized how computers interact with people. For the first time, machines can not only understand and generate language but also analyze complex contexts, interpret instructions, and draw conclusions from which they derive actions.

Earlier agents had to be painstakingly programmed or extensively trained for every possible situation. Simple rule sets determined how a system should respond to a particular input. As a result, classical agents were highly specialized—reliable in clearly defined scenarios but hardly adaptable.

Siebert, J., & Kelbert, P. (2024, June 17). Wie funktionieren LLMs? Ein Blick ins Innere großer Sprachmodelle [How do LLMs work? A look inside large language models]. Fraunhofer IESE.

LLMs as the “Brain” of Modern AI Agents

LLMs have largely removed these limitations. Trained on huge datasets, they can recognize patterns, meanings, and relationships between concepts. With only a few additional instructions (“prompts”), they are able to plan, structure, and carry out complex tasks without all steps having to be specified in advance.

In modern AI agents, LLMs take on the role of “thinking.” They evaluate situations, propose strategies, and can autonomously combine action steps.

A simple example illustrates the principle: an LLM-based calendar agent receives the goal of finding a common appointment for five people. It independently searches their calendars, detects conflicts, proposes alternatives, and finally sends an invitation with prepared materials—without a human having to specify every intermediate step.
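The conflict-detection step of such a calendar agent is a classic interval problem. The sketch below assumes that the agent has already read everyone's busy times from their calendars. The function name `find_common_slot` and the data layout are hypothetical. In an LLM-based agent, this logic would typically be a tool that the model calls rather than something the model computes itself.

```python
from datetime import datetime, timedelta
from typing import Optional

Interval = tuple[datetime, datetime]


def find_common_slot(busy: dict[str, list[Interval]], window: Interval,
                     duration: timedelta) -> Optional[Interval]:
    """Return the earliest slot of `duration` inside `window` in which nobody is busy."""
    # Merge all participants' busy intervals into one list sorted by start time.
    meetings = sorted(iv for intervals in busy.values() for iv in intervals)
    candidate = window[0]
    for start, end in meetings:
        if end <= candidate:
            continue                      # ends before the candidate slot: no conflict
        if start - candidate >= duration:
            break                         # the gap before this meeting is long enough
        candidate = end                   # conflict detected: try again after this meeting
    if candidate + duration <= window[1]:
        return candidate, candidate + duration
    return None                           # no free slot inside the window


day = datetime(2025, 10, 21)
busy = {
    "Alice": [(day.replace(hour=9), day.replace(hour=10))],
    "Bob": [(day.replace(hour=9, minute=30), day.replace(hour=11))],
    "Carol": [(day.replace(hour=12), day.replace(hour=13))],
}
window = (day.replace(hour=9), day.replace(hour=17))
slot = find_common_slot(busy, window, timedelta(hours=1))
print(slot[0].time(), slot[1].time())  # 11:00:00 12:00:00
```

The agent would then use the returned slot to draft and send the invitation; if the function returns `None`, it could propose alternatives such as a different day.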

This ability to plan and act autonomously makes LLM AI agents so powerful and explains why they are currently at the center of attention.

Technically, they connect two worlds:

- the classical agent architecture (perception, decision, action), and

- the cognitive flexibility of language models for complex tasks.

So when people talk about AI agents today, in most cases they mean precisely this new generation of LLM AI agents—systems that are controlled via natural language, act autonomously, and are able to take over entire digital work processes.

In what follows, we therefore consider exclusively these LLM-based agents and show why they are regarded as a central building block of the next wave of automation.

4. Why Are AI Agents Becoming So Relevant for Companies?

AI agents fascinate because they accomplish something previously reserved for humans: autonomous, goal-oriented action. They do not just perform tasks according to fixed rules; they can decide for themselves how best to achieve a goal.


This capability is the source of their enormous potential. In theory, nearly every digital task people carry out today, from reviewing emails to preparing reports to organizing meetings, could also be taken over by an AI agent. And the agent could do so around the clock, without breaks, with consistent quality, and in a fraction of the time.

For companies, this opens up far-reaching opportunities. AI agents can:

- Automate routine work, such as creating, sorting, and summarizing documents,

- Link information and knowledge more efficiently, e.g., by bringing together relevant content from different data sources,

- Speed up processes, for example by autonomously preparing projects, proposals, or presentations,

- Relieve employees so they can focus on creative, strategic, or interpersonal tasks.

An Illustrative Example:

A sales agent could:

- automatically prioritize incoming customer inquiries,

- retrieve relevant information from the CRM system,

- generate personalized draft responses,

- and forward open points to the responsible employee.
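A heavily simplified version of this sales agent might look like the following. The CRM dictionary, the keyword lists, and the rule that discounts and contracts always go to a human are assumptions for illustration. In a real LLM-based agent, the prioritization and the draft reply would come from the language model rather than from keyword matching and a template.

```python
from dataclasses import dataclass, field

# Hypothetical stand-in for a CRM system: sender address -> customer record.
CRM = {"buyer@acme.example": {"name": "ACME Corp"}}
URGENT_TERMS = ("urgent", "outage", "cancel")
HUMAN_TOPICS = ("discount", "contract")  # decisions the agent must not make on its own


@dataclass
class Inquiry:
    sender: str
    text: str


@dataclass
class Result:
    priority: str
    draft: str
    forwarded: list[str] = field(default_factory=list)


def handle_inquiry(inquiry: Inquiry) -> Result:
    text = inquiry.text.lower()
    # 1. Prioritize the incoming inquiry.
    priority = "high" if any(term in text for term in URGENT_TERMS) else "normal"
    # 2. Retrieve relevant customer information from the CRM.
    customer = CRM.get(inquiry.sender, {"name": "Customer"})
    # 3. Generate a personalized draft (an LLM would write this in a real agent).
    draft = (f"Dear {customer['name']}, thank you for your message. "
             "We are looking into your request.")
    # 4. Forward open points that need a human decision.
    forwarded = [topic for topic in HUMAN_TOPICS if topic in text]
    return Result(priority, draft, forwarded)


result = handle_inquiry(Inquiry("buyer@acme.example",
                                "URGENT: can we get a discount on the contract renewal?"))
print(result.priority, result.forwarded)  # high ['discount', 'contract']
```

The draft is only a proposal: step 4 is what keeps the responsible employee in the loop.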


Collaboration Between Humans & Machines

At the same time, the human role is changing. While classical automation primarily targeted efficiency and repeatability, agentic systems focus on cooperation between humans and machines. Humans define goals and constraints; agents take over operational execution. This combination of autonomy and collaboration makes AI agents one of the most promising tools of the coming years.

5. Where Do Challenges and Risks of AI Agents Lie?

The possibilities described above raise a whole series of technical, philosophical, moral, legal, and social questions that can hardly be covered here. The most immediately relevant challenges are technical and legal in nature.

Technical Limits (Hallucinations, Wrong Decisions)

LLMs are ultimately computer systems that work with probabilities and can therefore deliver unexpected and incorrect answers—so-called hallucinations. Despite all technical progress, this remains an unsolved (and perhaps inherent) problem. While this is an annoyance in a chat with an LLM, it can have devastating consequences in an AI agent that can autonomously make far-reaching decisions—from inappropriate emails to incorrect orders worth millions to risks to life and limb.

This directly leads to legal and compliance problems: who is responsible for a system that acts autonomously and causes damage?

Why Human Oversight Remains Indispensable

For these reasons, fully autonomous operation of AI agents with maximum freedom is currently either not possible or not desirable. To still harness their enormous potential, safety mechanisms are required that give the AI agent as much freedom as it needs to fulfill its tasks while keeping the damage as small as possible in the worst case. Intensive practical and theoretical research in this area now makes powerful AI agents possible, but still under human oversight.
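One common way to implement such a safety mechanism is an approval gate: the agent acts on its own when an action is low-risk and must ask a human otherwise. The sketch assumes two illustrative criteria, reversibility and a cost limit of 1,000 EUR; which criteria make sense depends entirely on the use case.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Action:
    description: str
    cost_eur: float
    reversible: bool


def execute_with_oversight(action: Action,
                           ask_human: Callable[[Action], bool],
                           auto_limit_eur: float = 1_000.0) -> str:
    """Give the agent freedom for low-risk actions; require human approval otherwise."""
    low_risk = action.reversible and action.cost_eur <= auto_limit_eur
    if low_risk:
        return f"executed: {action.description}"
    if ask_human(action):                 # human-in-the-loop checkpoint
        return f"executed after approval: {action.description}"
    return f"blocked: {action.description}"


def reject(action: Action) -> bool:
    return False  # a reviewer who declines everything


print(execute_with_oversight(Action("save email draft", 0.0, True), reject))
# executed: save email draft
print(execute_with_oversight(Action("order 500 units", 2_000_000.0, False), reject))
# blocked: order 500 units
```

The worst case of a wrong decision is thereby bounded: an expensive or irreversible order never goes out without a person signing off.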

6. RAG & Agentic RAG – AI Agents in Practice

A particularly useful and well-controllable application of AI agents can be found in RAG systems. To understand this, it helps to look at how LLMs are used.

Classic RAG vs. Modern RAG

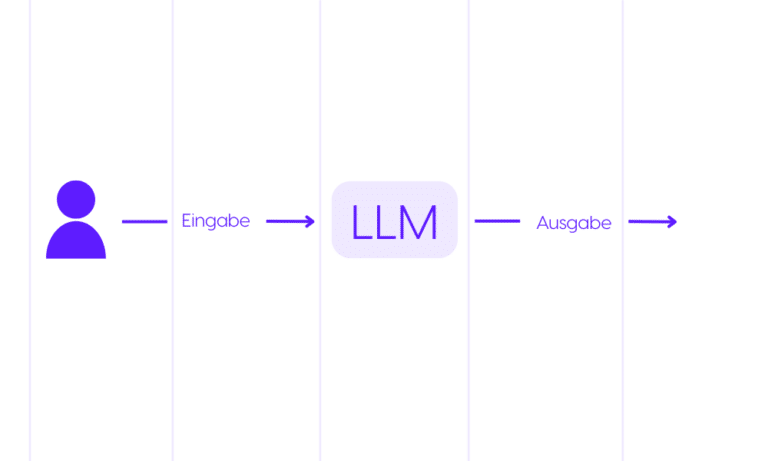

At the beginning, i.e., at the end of 2022, LLMs were primarily used as simple chatbots—above all ChatGPT. That is, a user input was converted directly into an answer by the LLM. Impressive as that is, it soon hit limits because LLMs are subject to inherent knowledge constraints. LLMs are trained on a very large but time-bounded dataset. Events that took place after training are, in principle, unknown to an LLM (“Who won the election yesterday?”).

In addition, the training dataset does not include company-internal or other non-public data. Questions such as “What is the rule on compensatory time in my department?” therefore go unanswered or receive no useful answer.

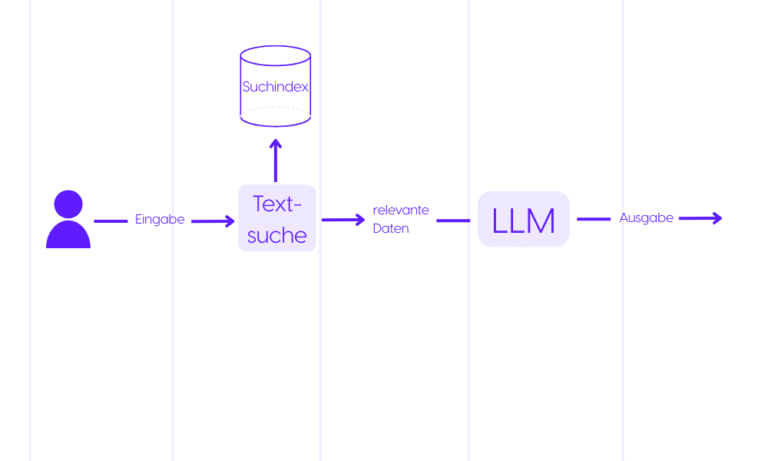

Out of this need arose “classic RAG,” in which the LLM is coupled with a data store. RAG stands for Retrieval-Augmented Generation: before the LLM generates an answer, relevant data are retrieved from the data store (in many cases via text search) and passed to the LLM together with the question as supporting context. This makes up-to-date or internal company knowledge accessible to the LLM.
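The retrieve-then-generate flow of classic RAG can be sketched as follows. The document store, the file names, and the word-overlap ranking (a very simple form of text search) are assumptions for illustration. The final step, sending the prompt to an LLM, is omitted because it depends on the model provider.

```python
import re

# Hypothetical document store (illustrative content only).
DOCUMENTS = {
    "hr/overtime.txt": "Overtime in the sales department is compensated with time off within three months.",
    "it/vpn.txt": "Employees connect to the VPN with their company account.",
    "canteen/menu.txt": "The canteen is open from 11:30 to 14:00.",
}


def words(text: str) -> set[str]:
    return set(re.findall(r"\w+", text.lower()))


def retrieve(question: str, k: int = 1) -> list[str]:
    """Retrieval: rank documents by the number of words they share with the question."""
    q = words(question)
    ranked = sorted(DOCUMENTS, key=lambda doc_id: len(q & words(DOCUMENTS[doc_id])),
                    reverse=True)
    return ranked[:k]


def build_prompt(question: str, k: int = 1) -> str:
    """Augmentation: pass the retrieved text to the LLM together with the question."""
    context = "\n".join(DOCUMENTS[doc_id] for doc_id in retrieve(question, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


question = "What is the rule on compensatory time in my department?"
print(retrieve(question))  # ['hr/overtime.txt']
```

Production systems usually replace the word overlap with full-text or semantic (embedding-based) search, but the structure of retrieve, augment, and generate stays the same.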

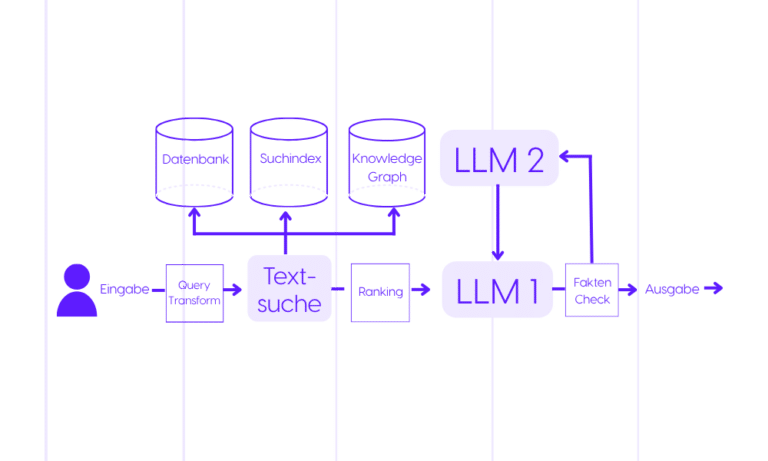

Over time, many additional modules were developed and added to this RAG system, leading to “modern RAG.” Here, many different data stores, LLMs, and other modules are executed to arrive at the best possible answer. This happens rigidly in the sense that from the user input to the final answer every module is run in a fixed order. That means both for the simplest inputs (“please,” “thanks,” …) and for more complex ones (“Summarize all documents assigned to person A that have not been edited in 2 years.”) the same modules run in the same order—over-engineered in the simple case and possibly under-engineered in the complex case.
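The rigidity of modern RAG shows up clearly in code: the pipeline is a fixed list of modules, and every input passes through all of them. The module names below are hypothetical placeholders that only record that they ran.

```python
from typing import Callable

State = dict


def make_module(name: str) -> Callable[[State], State]:
    """Create a placeholder module that only records that it ran."""
    def module(state: State) -> State:
        state["executed"].append(name)   # a real module would retrieve, rerank, etc.
        return state
    return module


# Hypothetical module names; the order is fixed at design time.
PIPELINE = [make_module(name) for name in
            ("rewrite_query", "search_documents", "search_tables", "rerank", "generate_answer")]


def run_modern_rag(user_input: str) -> list[str]:
    state: State = {"input": user_input, "executed": []}
    for module in PIPELINE:              # every module runs, in the same order, for every input
        state = module(state)
    return state["executed"]


simple = run_modern_rag("thanks")
complex_ = run_modern_rag(
    "Summarize all documents assigned to person A that have not been edited in 2 years.")
print(simple == complex_)  # True
```

A simple "thanks" triggers five modules, while the complex request gets no extra steps such as filtering by owner or edit date.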

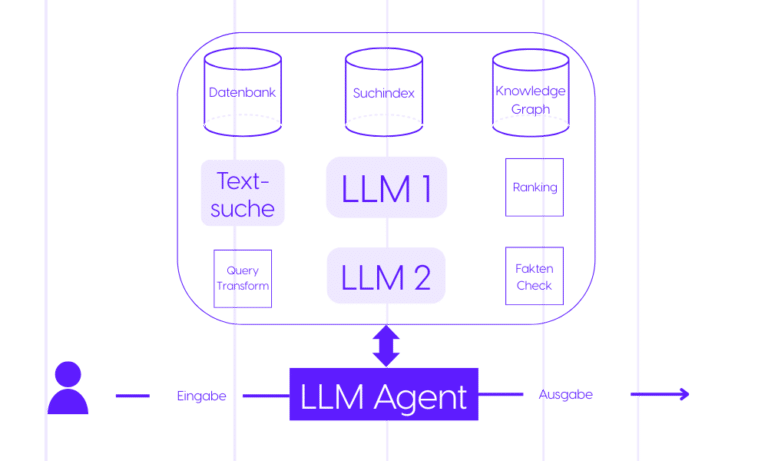

Agentic RAG as the Next Stage of Automation

This led to “Agentic RAG,” in which an AI agent (LLM agent) controls all modules. The agent receives the user input and the available modules as its environment and executes them step by step in the order needed to reach its goal (to generate the best possible answer). This allows it to respond flexibly and autonomously to different user inputs: simple inputs lead to simple workflows; more complex inputs lead to more complex workflows. The AI agent has access to a toolbox where each tool performs a clearly defined task that can be combined with other tools. The agent calls the relevant tools for the respective input in the right order and compiles the results into a coherent answer for the user.
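In contrast to the fixed pipeline, an agentic RAG loop lets a planner choose the next tool until it decides that no further tool is needed. In this sketch, the keyword-based function `choose_next_tool` stands in for the LLM's decision, and the tool names and placeholder results are hypothetical. The step limit is an added safeguard against endless loops.

```python
from typing import Callable, Optional

# Hypothetical toolbox: each tool performs one clearly defined task (results are placeholders).
TOOLS: dict[str, Callable[[str], str]] = {
    "search_documents": lambda q: "candidate documents",
    "filter_by_owner": lambda q: "documents assigned to person A",
    "filter_by_last_edit": lambda q: "documents not edited for 2 years",
    "summarize": lambda q: "summary of the remaining documents",
}
SMALL_TALK = {"please", "thanks", "thank you"}


def choose_next_tool(user_input: str, used: list[str]) -> Optional[str]:
    """Stand-in for the LLM's decision; a real agent would ask the LLM which tool to call next."""
    text = user_input.lower().strip(" .!")
    if text in SMALL_TALK:
        return None                               # simple input: no tools needed
    needed = ["search_documents"]
    if "person a" in text:
        needed.append("filter_by_owner")
    if "not been edited" in text:
        needed.append("filter_by_last_edit")
    if text.startswith("summarize"):
        needed.append("summarize")
    return next((tool for tool in needed if tool not in used), None)


def run_agentic_rag(user_input: str, max_steps: int = 10) -> tuple[list[str], str]:
    used: list[str] = []
    results: list[str] = []
    for _ in range(max_steps):                    # step-by-step loop with a safety limit
        tool = choose_next_tool(user_input, used)
        if tool is None:
            break
        results.append(TOOLS[tool](user_input))
        used.append(tool)
    answer = "; ".join(results) if results else "direct reply, no tools needed"
    return used, answer


print(run_agentic_rag("Thanks!")[0])  # []
```

A complex request such as "Summarize all documents assigned to person A that have not been edited in 2 years." produces a four-step workflow, while small talk produces none.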

7. Business Use Cases for AI Agents: MyGPT

MyGPT, as a privacy-secure RAG system, has used AI agents in parts of the system from the very beginning. Depending on user preference, these agents may act more or less autonomously: users can set stricter or looser constraints within which the AI agent may act freely. This ensures that, depending on how critical a use case is, the full potential of an autonomous system can be used while all compliance policies are maintained.

Knowledge Management & Document Automation


A central place in MyGPT where an AI agent is used is the selection and querying of different knowledge bases.

MyGPT supports the logical grouping of data into knowledge bases. Depending on the data type, different types of queries are available: for natural-language text in PDF documents, a full-text or semantic search can find the matching passages; for Excel spreadsheets, formula-like queries can be performed (“What is the difference between columns A and D?”). Many other query types are also available, e.g., a structured search in the table of contents of all data or a web search.
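The idea that each data type comes with its own query types can be sketched with two small knowledge-base classes. The class and function names are hypothetical and do not describe MyGPT's internal API; they only illustrate a full-text search over text passages and a formula-like column query over a table.

```python
from dataclasses import dataclass


@dataclass
class TextKnowledgeBase:
    passages: list[str]               # e.g., text extracted from PDF documents


@dataclass
class TableKnowledgeBase:
    columns: dict[str, list[float]]   # e.g., the columns of an Excel sheet


def available_queries(kb: object) -> list[str]:
    """Which query types make sense depends on the data type of the knowledge base."""
    if isinstance(kb, TextKnowledgeBase):
        return ["full_text_search"]
    if isinstance(kb, TableKnowledgeBase):
        return ["column_difference"]
    return []


def full_text_search(kb: TextKnowledgeBase, query: str) -> list[str]:
    """Return the passages that contain every query term."""
    terms = query.lower().split()
    return [p for p in kb.passages if all(t in p.lower() for t in terms)]


def column_difference(kb: TableKnowledgeBase, a: str, b: str) -> list[float]:
    """Formula-like query: row-wise difference between columns a and b."""
    return [x - y for x, y in zip(kb.columns[a], kb.columns[b])]


sheet = TableKnowledgeBase({"A": [10.0, 20.0], "D": [4.0, 5.0]})
print(column_difference(sheet, "A", "D"))  # [6.0, 15.0]
```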

This leads to a multitude of ways to reach relevant information for a user question. To answer the user input as well as possible, the appropriate query in the appropriate knowledge base must be selected dynamically depending on the content. Agentic RAG helps to include neither too much nor too little data in order to handle the respective use case optimally.

Concrete Example:

If the user, for example, asks for up-to-date information, static knowledge bases are ignored and a web search is performed directly. If, on the other hand, the question concerns internal work instructions, the table of contents of the relevant knowledge bases is searched first and, based on the result, the relevant document is retrieved.
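The routing decision from this example can be illustrated with a rule-based stand-in. In MyGPT the agent makes this choice; the keyword rules, the table of contents, the document IDs, and the fallback `semantic_search` step below are assumptions made only for this sketch.

```python
import re

# Hypothetical table of contents of an internal knowledge base: topic -> document ID.
TABLE_OF_CONTENTS = {
    "machine cleaning": "doc-17",
    "shift handover": "doc-42",
}
RECENCY_TERMS = {"today", "latest", "current", "yesterday", "news"}


def plan_retrieval(question: str) -> list[str]:
    """Return the retrieval steps for a question (rule-based stand-in for the agent)."""
    q = question.lower()
    words = set(re.findall(r"\w+", q))
    if words & RECENCY_TERMS:
        return ["web_search"]                      # skip static knowledge bases entirely
    if "instruction" in words or "instructions" in words:
        steps = ["search_table_of_contents"]       # first look at the table of contents
        for topic, doc_id in TABLE_OF_CONTENTS.items():
            if topic in q:
                steps.append(f"fetch:{doc_id}")    # then fetch only the matching document
                break
        return steps
    return ["semantic_search"]                     # assumed default for all other questions


print(plan_retrieval("Where is the work instruction for machine cleaning?"))
# ['search_table_of_contents', 'fetch:doc-17']
```

Fetching only the matching document keeps the context small, which is the "neither too much nor too little data" goal described above.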

The AI agent in MyGPT always respects the user-defined restrictions. If the required permissions for certain data are missing, or if web search is not permitted for the agent, the system attempts to generate the best possible answer without these data or modules, within the scope of what is allowed.
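Respecting such restrictions can be thought of as a filter applied to the agent's plan before anything is executed. The `Restrictions` structure and the source names are hypothetical; the point is that forbidden sources are dropped and the agent works with whatever remains.

```python
from dataclasses import dataclass, field


@dataclass
class Restrictions:
    """User-defined limits (hypothetical structure) within which the agent may act freely."""
    readable_knowledge_bases: set[str] = field(default_factory=set)
    web_search_allowed: bool = False


def permitted_steps(planned: list[str], limits: Restrictions) -> list[str]:
    """Drop every planned source the agent may not use; keep the order of the rest."""
    allowed = []
    for source in planned:
        if source == "web_search":
            if limits.web_search_allowed:
                allowed.append(source)
        elif source in limits.readable_knowledge_bases:
            allowed.append(source)
    return allowed


limits = Restrictions({"hr_handbook"})
print(permitted_steps(["web_search", "hr_handbook", "finance_reports"], limits))
# ['hr_handbook']
```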

Outlook and Conclusion

As with many hype topics, it is also difficult with AI agents to find the right balance between inflated expectations and pessimism. In principle, AI agents offer unprecedented potential in the automation of digital work processes. At the same time, some use cases for AI agents already hit technical limitations—especially hallucinations.

A real-world example shows how close frustration and opportunity are in this topic:

Microsoft launched a pilot project in which AI agents take on tasks in software development. Although the agents performed impressively in implementing the requested features, they also introduced minor errors. Human developers then entered into a discussion with the AI agent and instructed it to fix the errors. A back-and-forth ensued, with each side (AI vs. human) convinced it was in the right.

MyGPT therefore relies on a hybrid approach—humans and AI “hand in hand.” Human users and administrators of MyGPT define the constraints within which AI agents are allowed to move freely. Depending on the use case, these constraints can be more or less strict.

AI agents mark the next logical step in the evolution of generative AI: from reactive language models to autonomous, goal-oriented systems. The challenge is to combine the enormous potential of this technology with human expertise responsibly.

Companies that deploy Agentic AI strategically will not only work more efficiently but will also establish new forms of collaboration between humans and machines. This is precisely where the future lies: not in complete automation, but in the intelligent coordination of AI agents and human knowledge.

With this understanding, we are continuously evolving the MyGPT platform as a tool that enables organizations to use the benefits of AI agents productively, securely, and transparently.

Learn more about MyGPT

Want more details about the implementation process?

Download our MyGPT Implementation Guide for free – including a step-by-step setup guide and practical use case examples.

Initial Consultation – Free & Non-Binding

How could AI make your workday easier?

Do you want to use AI effectively in your company but aren’t quite sure how? Or do you already have an idea and are looking for the right solution? Let’s simply talk about it.

In a free initial consultation, we’ll explore together what makes sense for your business – with no sales pressure, but plenty of hands-on experience from real-world projects.

These companies already trust Leftshift One

Frequently Asked Questions

An AI agent is a computer system that perceives its environment, makes decisions, and acts to achieve a goal. Examples include self-driving cars, digital assistants, and purchasing agents.

Chatbots usually only respond to individual inputs. AI agents, by contrast, can plan independently, combine multiple steps, and complete complex tasks. They work according to the principle perceive – decide – act.

Agentic AI describes the new generation of AI agents based on large language models (LLMs). These systems use natural language to understand tasks, respond flexibly, and automate work processes.

Companies benefit from AI agents through time savings, automation of routine tasks, better knowledge management, and greater efficiency. This allows employees to focus more on creative and strategic work.

Challenges include hallucinations (incorrect answers), legal uncertainties, and the need for human oversight. Fully autonomous agents are still risky today, so a hybrid approach is recommended.

Agentic RAG (Retrieval-Augmented Generation) combines LLMs with data stores. The AI agent decides flexibly which data sources or tools it needs to use to generate the best possible answer.

MyGPT integrates AI agents into its RAG architecture. Users can specify how autonomously the agents may act. This makes it possible to process information from knowledge bases, documents, or web sources efficiently and in a privacy-secure way.